Render Development Update

on March 9th, 2010, by Brecht

As mentioned in my last blog post, there were four big changes planned: a shading system refactor, indirect lighting, per tile subdivision and tiled/mipmap image caching. As usual, in practice those plans can change quickly in production. So here’s an update on the plans and some results.

For indirect lighting, the intention was to use a point based method. I found the performance of these quite disappointing. The micro-rendering method works well only with low resolution grids, but those are noisy, and when the resolution is increased performance quickly stops being good. That leads to the Pixar method, which is somewhat more complicated. This can scale better, but the render times in their paper aren’t all that impressive; in fact, some raytracers can render similar images faster.

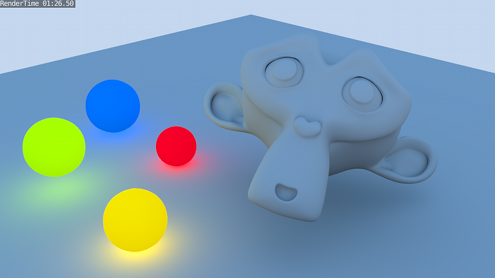

Indirect lighting test with sky light and emitting materials

So, I decided to try out raytracing low resolution geometry combined with irradiance caching. With the new and faster raytracing code this seems to be feasible performance-wise, and the code for this is now available in a separate render branch. Right now we’re not yet entirely sure that performance is good enough, though we may be close to about 1 hour per 2k frame on average. This is with irradiance cache, 2k rays per sample, low resolution geometry, one bounce, one or a few lights and tricks to approximate bump mapping. These are the restrictions that we’ll probably work with.
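As a rough illustration of what irradiance caching means here, the following Python sketch uses the classic weighting scheme from Ward's irradiance caching work: an expensive gather is only done where no nearby cached sample is good enough, and otherwise cached values are blended. This is not the code in the render branch. The names `Record`, `IrradianceCache`, `gather` and the `accuracy` parameter are all hypothetical, and the linear search stands in for the spatial structure a real renderer would use.

```python
import math
from dataclasses import dataclass

@dataclass
class Record:
    position: tuple    # (x, y, z) where the sample was taken
    normal: tuple      # unit surface normal at the sample
    irradiance: float  # single channel, for brevity
    radius: float      # harmonic mean distance to geometry seen by the sample

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def _dist(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def weight(rec, p, n):
    """Ward-style weight: large when p is close to the record and normals agree."""
    positional = _dist(p, rec.position) / rec.radius
    angular = math.sqrt(max(0.0, 1.0 - _dot(n, rec.normal)))
    denom = positional + angular
    return math.inf if denom == 0.0 else 1.0 / denom

class IrradianceCache:
    def __init__(self, accuracy=0.3):
        self.accuracy = accuracy  # smaller value = stricter reuse, more samples
        self.records = []

    def lookup(self, p, n):
        """Return the interpolated irradiance, or None if no record is usable."""
        total_w = 0.0
        total_e = 0.0
        for rec in self.records:  # assumption: linear scan instead of an octree
            w = weight(rec, p, n)
            if w > 1.0 / self.accuracy:
                if math.isinf(w):
                    return rec.irradiance  # exactly on top of a record
                total_w += w
                total_e += w * rec.irradiance
        return total_e / total_w if total_w > 0.0 else None

    def shade(self, p, n, gather):
        """Reuse cached values where possible, otherwise gather and store."""
        e = self.lookup(p, n)
        if e is None:
            # gather() is the expensive part, e.g. tracing many rays
            e, radius = gather(p, n)
            self.records.append(Record(p, n, e, radius))
        return e
```

The point of the scheme is that the costly gather (the "2k rays per sample" above) runs only at a sparse set of points, while most shading points are served by interpolation.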

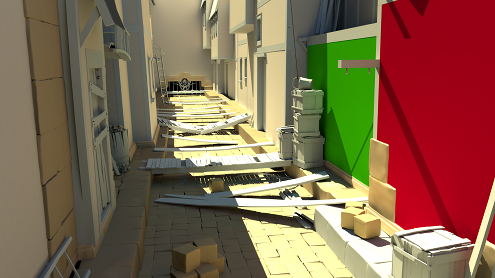

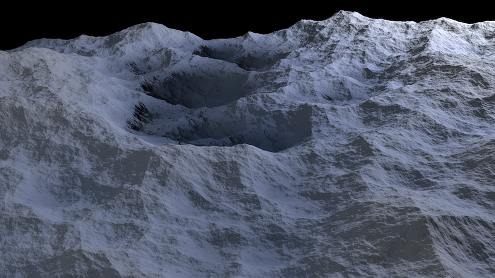

Indirect lighting test in alley scene, with sun and sky light

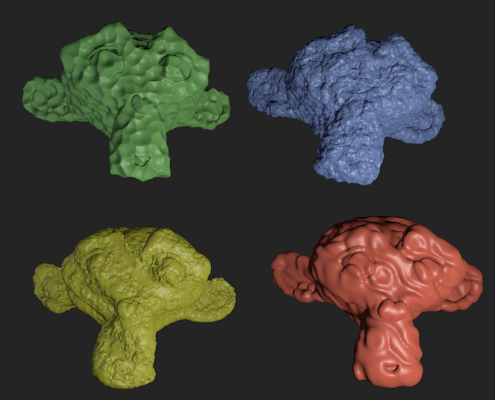

A prototype implementation of per tile subdivision is also there for testing now. It’s very early though: it doesn’t work with shadows, texture coordinates, raytracing, etc., but it’s already possible to do some tests.

Displaced monkeys with per tile subdivision

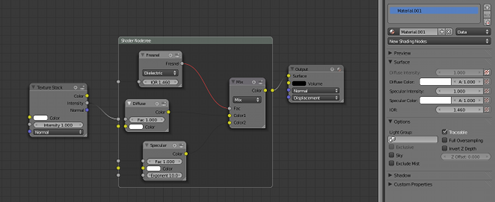

Regarding the shading system, I worked on node based shaders for a while, but it became clear that the benefits for Durian wouldn’t be that big and that it was taking too much time. Being able to define materials as nodes in a more correct and flexible way would be nice, but it would not help us render better pictures much quicker; in fact, converting our existing materials would probably take more time.

New shading nodes – you’ll have to wait a bit longer for this

The code for this is not committed yet, and will probably have to wait until after Durian. However, the core render engine code has been and is being further refactored so that we can easily plug in a different material system, which is a big chunk of the work.

Image caching I haven’t really started on yet. I’m looking into using the OpenImageIO library, though it’s not clear yet if this would be a good decision, as it would introduce another image system in Blender and duplicate much of the existing code. Besides that, images aren’t the only thing that would benefit from caching: there are also, for example, deep shadow buffers, which are not covered by OpenImageIO. Reusing code could save us a lot of time though.
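To make the idea of a tiled/mipmap cache a bit more concrete, here is a minimal Python sketch under stated assumptions. It is neither OpenImageIO's API nor anything in Blender: `TileCache`, `load_tile`, `max_bytes` and `mip_level` are hypothetical names. Tiles are keyed by image, mip level and tile coordinates, loaded on demand, and the least recently used ones are evicted once a memory budget is exceeded.

```python
import math
from collections import OrderedDict

class TileCache:
    """Least-recently-used cache of image tiles with a byte budget."""

    def __init__(self, load_tile, max_bytes):
        self._load = load_tile       # hypothetical callback: key -> bytes
        self._max = max_bytes
        self._used = 0
        self._tiles = OrderedDict()  # key -> tile data, oldest first

    def get(self, key):
        """key is (image_id, mip_level, tile_x, tile_y)."""
        tile = self._tiles.get(key)
        if tile is not None:
            self._tiles.move_to_end(key)  # mark as most recently used
            return tile
        tile = self._load(key)            # cache miss: read from disk
        self._tiles[key] = tile
        self._used += len(tile)
        # Evict old tiles until we fit, but always keep the newest one.
        while self._used > self._max and len(self._tiles) > 1:
            _, evicted = self._tiles.popitem(last=False)
            self._used -= len(evicted)
        return tile

def mip_level(texels_per_pixel, num_levels):
    """Pick the mip level whose texels roughly match the pixel footprint."""
    level = int(math.log2(max(texels_per_pixel, 1.0)))
    return min(level, num_levels - 1)
```

Combining the two is what keeps memory bounded: distant textures only touch small, coarse mip levels, and tiles that are no longer visited fall out of the cache. The same structure could in principle hold tiles of other data, such as the deep shadow buffers mentioned above, which is one argument for a cache that is not tied to images only.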

Single plane subdivided with voronoi and clouds texture for displacement

Further plans are:

- Try to get indirect light rendering faster, make it work better with strands, bump mapping, and displacement.

- Work further on the per tile subdivision implementation to get it beyond a prototype.

- Find out if we can use OpenImageIO, and either use it or implement our own tiled/mipmap cache system.

Brecht.

March 9th, 2010 at 4:51 pm

oooo///cool !

good bump!

March 9th, 2010 at 4:59 pm

It all looks very cool, nice work

March 9th, 2010 at 5:00 pm

whao, this is very cool

March 9th, 2010 at 5:19 pm

Impressive!

What do you think of temporal irradiance caching like in this paper, as a method to speed-up animation rendering?

ftp://ftp.irisa.fr/techreports/2006/PI-1796.pdf

Not suited for HD rendering? how realistic would be its implementation?

regards

March 9th, 2010 at 5:20 pm

The shading nodes look awesome! To bad that we’ll have to wait until after Durian, fortunately that we’ll have a lot of other goodies to play with until then =P

March 9th, 2010 at 5:20 pm

Hey Brecht,

Speaking of IL, have you seen this “Cascaded Light Propagation Volumes for Real-Time Indirect Illumination” by CryTek ?

Video : http://www.youtube.com/watch?v=Pq39Xb7OdH8

Paper : http://www.crytek.com/fileadmin/user_upload/inside/presentations/2010_I3D/2010-I3D_CLPV.pdf

PowerPoint : http://www.crytek.com/fileadmin/user_upload/inside/presentations/2010_I3D/2010-I3D_CLPV.ppt

The comparison with Mental Ray at the end of the video is quite impressive, but not perfect. Maybe a similar but somewhat slower method (more passes?) could be achieved for offline rendering?

March 9th, 2010 at 5:21 pm

YAY 🙂

March 9th, 2010 at 5:24 pm

the author’s homepage

http://gautron.pascal.free.fr/projects/trc/trc.htm

March 9th, 2010 at 5:38 pm

ruddy, from reading the paper I don’t trust this algorithm to give reliable results, and the videos to demonstrate the algorithm don’t convince me either. Further, inter frame optimizations are problematic for render farms as you can no longer render multiple frames at the same time, which can end up making things slower.

March 9th, 2010 at 5:42 pm

ok, thanks for taking the time to reply, and thanks again for all the amazing progresses 🙂

March 9th, 2010 at 5:42 pm

Looks like you have a hard work ahead. Thank you for keepin’ it up. To all the team.

March 9th, 2010 at 5:49 pm

This is all so cool, I cant wait to start playing with this stuff! thanks once again!

March 9th, 2010 at 6:07 pm

So much of this is stuff I don’t understand. I’m glad you are doing all of these difficult things. Blender is great.

March 9th, 2010 at 6:16 pm

I was playing with it yesterday evening. It looks very cool! In one moment I was slightly disappointed when image attached on big ground plane didn’t radiate image colors (pretty wild colors) to another object standing on it. Then I grasped, that it could be probably point based if using approximate gather and then I had a big joy when colors appeared after plane subdivision.

I love this feature very much!

And all the other features seems very cool and promising too!

Thanks and go ahead!

But be sure to take a rest sometimes! 😉

March 9th, 2010 at 6:22 pm

You’re the man, Brecht. I’ll have to come to Amsterdam again just so I can give you a high five. Awesome.

March 9th, 2010 at 6:22 pm

You’re the man, Brecht. I’ll have to come to Amsterdam again just so I can give you a high five. 😀 Micropolygons here we come. woooo!

March 9th, 2010 at 6:23 pm

Dude, you’re such a tease.

The *one* area of the blender codebase I actually understand and could help out with is the node system and the “code for this is not committed yet, and will probably have to wait until after Durian.”

Maybe you could throw up a patch somewhere?

March 9th, 2010 at 6:35 pm

Total Respect Brecht!

I really admire you for thinking of NEW WAYS to do things rather than going where everyone else has been before, this is what leads to innovation, the kind of innovation that benefits everyone.

Don’t hesitate to submit the code in a different branch so others can try it out, waiting after Durian would slow the process of the development as you probably want our feedback and bug testing, and trust me – most of us are more than eager to help out. After all – we’ve been groupies for years! 😉

March 9th, 2010 at 6:39 pm

Anonymous Coward: Ohh! The word of the man! Then I’ll become your fan! 🙂

March 9th, 2010 at 6:51 pm

thankyou verymuch!

March 9th, 2010 at 7:03 pm

Wow.. Brecth .. This is awesome progress . Thanks a lot for all ur hard work 🙂 .

March 9th, 2010 at 7:08 pm

I know that this project is for the blender software itself, but the the mission of the foundation is to promote opensource 3d software with blender at its core. I wonder if they looked in to using any external rendering engines. I feel like yafaray could do a very good job at 1 hour per 2k frame if radiance caching was added. Just something to think about.

March 9th, 2010 at 7:11 pm

thanks for the update!

wow, the alley scene looks really nice now. no annoying artifacts anymore…

March 9th, 2010 at 7:31 pm

Don’t know if this is the right place, but talking about refactoring the render engine, I find AO taking transparent materials into account rather important. It’s very annoying to have water or glass on the ground which becomes dark grey, because AO is calculated under it as if it were solid. Anyway, my compliments to all you coders, Blender is the most sophisticated piece of software I know. Thanks a lot! 🙂

March 9th, 2010 at 7:49 pm

Promising 🙂

March 9th, 2010 at 8:09 pm

Excellent Brecht!

March 9th, 2010 at 8:14 pm

ohh wow! Nice progress, Brecht! From that alley render I would say that you obliterated the black pixels artifact. The tile lines are almost gone as well. I see how challenging that is, because rendering in 1 tile has a significant performance cost.

Other renders look sweet too. And those nodes!? :O Some serious stuff to come. 🙂

Cheers!!

March 9th, 2010 at 8:16 pm

The idea of using the new luxrays library to utilize GPU vector processors for raytracing has been mentioned as a possible GSOC project, but maybe you might want to seriously consider the prospect of integrating it now. If the renderfarm machines have fairly recent GPUs in them, it would exponentially reduce the render times required. (working off the assumption that the majority of the render time in indirect lighting is spent in raytracing)

March 9th, 2010 at 8:32 pm

great work…amazing!!

any thoughts for GPU utilization somehow?

March 9th, 2010 at 8:35 pm

FishB8, even if e.g. raytracing was 70% of indirect light, and indirect light was 70% of total render time, you’d still only be able to halve the render time in the best case. For that we’re better off buying more CPUs instead of adding GPUs to the render farm.

loh, other rendering engines would be interesting to use, but it would need to be from the start, and e.g. yafaray would need major changes to handle hair and sss noise free, or handle displacement and more textures than fit in memory.
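The arithmetic behind the reply to FishB8 above is Amdahl's law: if only a fraction of the total time is accelerated, the rest bounds the overall gain. A small Python sketch, using the 70% figures from that reply and some illustrative GPU speedup factors (the function name and the factors are assumptions for the example):

```python
def amdahl_speedup(accelerated_fraction, factor):
    """Overall speedup when `accelerated_fraction` of the total time
    runs `factor` times faster and the rest is unchanged."""
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    if factor <= 0.0:
        raise ValueError("factor must be positive")
    remaining = 1.0 - accelerated_fraction
    return 1.0 / (remaining + accelerated_fraction / factor)

# Raytracing is 70% of indirect light, which is 70% of the render:
fraction = 0.7 * 0.7  # about 0.49 of total render time

for factor in (4.0, 10.0, 45.0, float("inf")):
    print(f"raytracing {factor}x faster -> render "
          f"{amdahl_speedup(fraction, factor):.2f}x faster")
```

Even with an infinitely fast raytracer the whole render gets at most 1 / (1 - 0.49), or a little under 2x, faster, which is the "halve the render time in the best case" from the comment.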

March 9th, 2010 at 8:54 pm

Brecht, thanks for all the hard work you are putting into the Blender Internal renderer, looks like it will be pretty slick for the Durian release 🙂

March 9th, 2010 at 9:46 pm

Awesome!!!!

March 9th, 2010 at 11:17 pm

Hi Brecht,

Have you heard of “Cascaded Light Propagation Volumes for Real-Time Indirect Illumination”.

They got some pretty good result, real-time is not really interesting here since we are looking for offline rendering, and it got some limitation which might be bypass with more iteration ? I don’t know, but still the comparaison with MR at the end looks pretty nice !

Video : http://www.youtube.com/watch?v=Pq39Xb7OdH8

Paper : http://www.crytek.com/fileadmin/user_upload/inside/presentations/2010_I3D/2010-I3D_CLPV.pdf

Slide : http://www.crytek.com/fileadmin/user_upload/inside/presentations/2010_I3D/2010-I3D_CLPV.ppt

anyway cool work

March 9th, 2010 at 11:42 pm

Question: I cannot find a good alternative word for googling: what is per-tile subdivision?

March 9th, 2010 at 11:42 pm

What about Pixar’s method of using PCA Basis functions for accelerated final gather? http://graphics.pixar.com/library/ShotRendering/paper.pdf

That would help with *noisy* GI animations, right?

March 10th, 2010 at 12:16 am

PS: is there going to be water in the bottom of the alley?

March 10th, 2010 at 12:33 am

The second render is absolutely awesome!!!

Good luck guys.

March 10th, 2010 at 12:40 am

About rendering time : do you plan to let the community help, using, for example, BOINC / BURP ?

BURP project has been quiet for a while, but maybe there are other network computing solutions of the same kind.

March 10th, 2010 at 1:04 am

Brecht, your work is AMAZING!

Are there Plans to improve Antialiasing for Durian? Ultra bad AA is the main reason why some professional Lighting/Rendering Guys don’t like Blender.

Coming from a Renderman Background I asked myself if it is theoretically possible to decouple shading from sampling with your new Micropolygon implementation?

March 10th, 2010 at 1:04 am

Question: isn’t the mipmap cache system part of the Deepshadow branch?

March 10th, 2010 at 1:06 am

yeah, BOINC/BURP SPRINT! I’m in!

March 10th, 2010 at 1:35 am

@brecht:

What if you create the irradiance cache with different resolution levels.

If I understand you use the irradiance cache only as a pre-pass filter. If the irradiance cache tends to “cost” too much calculation time, wouldn’t it be interesting to create it in different resolution levels?

E.g. you calculate the irradiance cache only from a 25% scaled image. The main edges should still be the same, and allow a good subdivision of the image for further processing.

Optional the user can set the resolution level of the irradiance cache higher (e.g. calculated from the 100% scaled image).

I know you spent much more time about it and considered many many options, but I’m curious person – so I ask my question this way, because it helps me to understand the “engine” of Blender and it’s technique better, if you confirm it or if you deny it and tell me my misunderstanding.

March 10th, 2010 at 1:38 am

A network rendering sprint would be great. I’d be in with 18 cores 😉

March 10th, 2010 at 2:24 am

fantastic

March 10th, 2010 at 3:05 am

It took me a bit of time while looking at the Alley scene image to figure out exactly what it was I was looking at, but I love the work! Love the edge definition it gives where the foot path meets the alley wall. How do you control the “bounce” level of the material? Same as with RayMirror? Don’t suppose you could spoil us a little by splitting the image up into a few passes so we can get a really good understanding of the effect on the image?

Cheerz!

March 10th, 2010 at 3:12 am

@brecht: I think you could reduce it by quite a bit more than half. There’s a lot more vector processors in a GPU than used in SSE SIMD. If you look at the test renders so far on the development tests of luxrays solutions, most of the increase in computation is anywhere between 4x to 10x. One was a speed up of 45x.

March 10th, 2010 at 3:32 am

Shame about the shading system. 🙁

Good luck with the rest of it, Brecht. Work hard. 😀

March 10th, 2010 at 4:34 am

Yes Performance is pathetic but its worth these results is it not?

March 10th, 2010 at 6:03 am

Wow, this looks so real ! Looks like a photo !

Can’t wait to see more renders !

March 10th, 2010 at 6:34 am

Would it be possible to make a new branch with your node materials. I’d love to play around with the code for that.

March 10th, 2010 at 11:32 am

Jupiter file cache

Saw this recently. Not sure if it could help with image caching or if you knew about it already.

http://code.google.com/p/jupiterfilecache/

March 10th, 2010 at 12:22 pm

@ MTracer:

I think so, and there is a grille as a hint at the back of the alley also… maybe a tribute to the very first open source movie in the world, does it sound familiar? 😉

http://d.imagehost.org/0213/ed_s.jpg

March 10th, 2010 at 12:25 pm

@ Brecht:

I’m almost speechless in front of the astonishing and incredibly quickly progressing improvements of the render departement in Blender.

That very alley render is the realization of a big big part of all the dreams of the blenderheads out there, GI coming true, and in the internal good old, and greatly improved of course, Blender raytracer!!!! 😀 🙂

March 10th, 2010 at 1:09 pm

I find this phenomenon interesting, somehow both the users and thus by extension the developers have overlooked the largest gaping hole in Blender as a production-ready tool. And that is 3d motion blur and depth of field.

I saw some posts for the GSoC 2010 ideas on Blenderartists, and it’s completely mind-boggling that only one person has posted 3d motion blur and 3d depth of field (micropolygon or raytraced) as a proposed GSoC project.

I believe the user base is at fault here, for not understanding the requirements of a production-worthy tool, and instead forcing the developers to focus on other flashy bells-and-whistles like GI, fluid simulations or sculpting.

I hope the Durian folks bring this up, saw it on the requests page but it didn’t have a priority icon.

March 10th, 2010 at 1:40 pm

Great work!

In the alley image, the walls painted red and green should emit much more color to the surrounding objects, the effect of color bleeding is barely noticeable

March 10th, 2010 at 1:42 pm

The alley render takes about 8 minutes on a quad core by the way, my estimation/hope of 1 hour a frame is for final renders with full detail, hair, textures, displacement, ..

@Irve, it’s automatic subdivision of the mesh for displacement, without using too much memory.

@MTracer: the method in that paper seems to be mutually exclusive with irradiance caching. Also, Pixar isn’t using this themselves as far as I know.

@Guybrush Threepwood, the irradiance cache already adapts itself to detail. There will be options to control the number of samples. Simply using the irradiance cache from a 25% render does not work well.

@FishB8: if it’s doing 50% raytracing you can only get a 2x speedup, no matter how fast the GPU.

@George Steel, I might commit it to the render branch commented out when I get the time.

@Jimmy Christensen, that’s only a small part of what we need, though could be useful for inspiration. But it looks like they haven’t uploaded the actual source code, only examples.

@loafie: every project has different requirements, not many people render animations, that doesn’t make them wrong.

@Juan Romero: I don’t think so, most of the light there is coming from the sky.

March 10th, 2010 at 2:14 pm

Brecht: That’s true, but I would think they still want their favourite tool to be as complete and widely accepted as possible even their area is still images.

The DoF still applies here.

Congratulations on the fantastic work on the render branch so far by the way!

March 10th, 2010 at 2:21 pm

Brecht, some great progress, although I’m a bit disappointed development has to go in some hacky way again because of the time frame of the movie project, not the opposite..

I LOVE the approach of low res raytracing with displacement. If you consider there will be heavily used soft shadows, maybe the displacement doesn’t have to work with shadows at all?

Did you check the luxrays library already? I think it could considerably speed up the raytracing part, especially with the approach of raytracing low poly geometry (luxrays should be able to handle scenes up to 10 000 000 polygons). Also the library already produces some really nice results and is now completely independent from luxrender.

this is link to the development branch:

http://src.luxrender.net/luxrays/

March 10th, 2010 at 2:35 pm

In the interest of not misrepresenting Blenders abilities, is per tile subdivision a form of micropolygons? It’s just when asked on other boards, you don’t want to make erroneous claims and then get accused of fan-boy-ism.

March 10th, 2010 at 3:25 pm

@ Juan Romero:

color bleeding is more than noticeable in that image and it is the way it should be, it works this way in the real world. You would barely see your hand turning blue because you’re under a clear sky. Color bleeding has to be delicate so as not to look ridiculous.

@ Sleeper:

wait for Brecht official words, but I think I can say “oh sure!” it’s real micropolygons you have there 🙂 Answer in your boards and don’t be afraid to appear fanboy 😉

March 10th, 2010 at 3:30 pm

@Shinobi, you are not under any sky, you are inside it.

If you put a green saturated card like that wall close to your hand in direct sunlight (the card) you’ll see how much green spill light there is.

March 10th, 2010 at 3:45 pm

@vilda: already answered about using GPU in an earlier comment.

@Sleeper: micropolygons are usually associated with REYES rendering, which is a more specific way to do also shading and motion blur, which this doesn’t do. If you just define micropolygons as really small polygons then yes, that’s what you have here.

March 10th, 2010 at 4:25 pm

Juan, I think you severely overestimate the amount of light that spills from normal materials.

To illustrate, below is an image from LuxRender depicting a fully saturated green cube on a grayish floor under a somewhat late afternoon sun. Please keep in mind that LuxRender’s light calculations are PHYSICALLY BASED, meaning it takes no artistic license whatsoever, it depicts exactly what would be expected in real life.

http://i44.tinypic.com/bhyx42.jpg

I had to fully increase the saturation of the original render twice to be able to show the green spill on the floor. THIS IS WHAT REAL LIFE COLOR SPILL LOOKS LIKE. It’s subtle!

March 10th, 2010 at 6:10 pm

Very nice progress 😀

D: In an open scene, colour spill will be less noticable than in a closed one. Most of the rays there exit to the infinity of the sky…

However, even in an indoor scene which is heavily based towards colour spill, it remains rather subtle (but still way more noticable than in your example) – the Cornell Box in all its variations is the most famous example 🙂

March 10th, 2010 at 6:27 pm

Just go GPU´d pleaseeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeeee!!!!

March 10th, 2010 at 6:37 pm

@ Juan Romero:

if you don’t trust my words, please, trust your eyes. Open a color chooser of your choice and move the cursor over the different areas of the image to see their colors fade in the surrounding objects color.

Do you really think that crate should be more green than this?

http://d.imagehost.org/0210/cb.jpg

March 10th, 2010 at 6:40 pm

@D and Brecht:

I don’t know what you are trying to prove with that example, it has no resemblance whatsoever with the alley. The shadow sides of the cube, obviously are going to spill allmost no light. I’m referring to a dark wall (I mean in shadow) parallel to a very bright one (under direct sunlight) and painted with highly saturated colors.

Any professional photographer can confirm this. I don’t know exactly how much color bleed there should be, but it surely is more than in the alley image. The first time I saw it I didn’t notice any red spill on the objects nearest to the red wall

March 10th, 2010 at 6:42 pm

sorry, my comment was for D only!

March 10th, 2010 at 6:50 pm

@Shinobi:

Yes I think it should be more green, given the color of the crate is white.

I’ll Try to upload a photograph (no cg will be the same even the most physically correct renderer) of what I mean when I get home.

Regards to all of you guys, and Brecht, congratulations on your work!

March 10th, 2010 at 7:19 pm

@ Juan romero:

I do really think not, unless you want obtain a patchwork.

Just in case, in your projects you can simply increase this value:

http://d.imagehost.org/0521/factor.jpg

March 10th, 2010 at 7:22 pm

@Shinobi, D, Juan Romero…:

I’m sure that the amount of reflected light highly depends on reflectivity characteristic of lit material. Your discussion miss that point. 😉

March 10th, 2010 at 8:14 pm

Great work!

Indirect lighting is one of the features I have been missing most in blender. Doing visualization work this makes a lot of difference. I used to add a bunch of small lamps in my scenes to avoid black shadows. AO can help in some situations but often gives a dirty look instead of the clean look from indirect lighting. Any indirect lighting solution in blender is a huge step forward. Most of us make more images than animations anyway and render time is not the biggest concern. I’m confident though that we will end up with a good and fast solution.

Actually.. I handed over a few concept sketches of a product to a client yesterday rendered with the new IL feature. So I guess we can say it has already been used professionally.

March 10th, 2010 at 8:39 pm

@francois, I’ve seen the paper, but it only does low frequency effects, and seems like it would suffer from flickering in some cases too.

Further, for anyone who has suggestions for different algorithms, I appreciate the suggestions but I’ve seen pretty much all the important papers published about this in the last 10 years, and it’s very unlikely that we’ll switch to something else or do GPU acceleration or whatever, there is simply no time for that.

March 10th, 2010 at 9:03 pm

This is great stuff. Excellent work.

What papers did you follow for tile based subdivision, if I may ask?

March 10th, 2010 at 9:04 pm

You’re doing a kick-arse job, Brecht, keep it up 🙂

March 10th, 2010 at 9:31 pm

Talking about external renderers – I know this project is dead, but Pixie has all the features you mentioned, and it is open source

http://www.renderpixie.com/

March 10th, 2010 at 10:36 pm

@brecht: I see what you’re saying. I thought you were talking about time spent raytracing rather than time spent on the complete rendering process.

March 11th, 2010 at 12:48 am

@brecht:

Thank you very much to take time to answer each person’s question.

Please do not hesitate to continue posting now, because so many asked questions.

Even it takes time to answer sometimes silly questions, it gives us (the community) great respect to hear your opinion to our simple questions.

March 11th, 2010 at 12:23 pm

@ 4museman:

you’re completely right, I agree with your point here. That’s a wall, so I don’t know how much it can spills colors, but more important is how to translate it in Blender.

I know the Emit value influences colors spill, I don’t know if there is another parameter for that to take into account for indirect lighting computation.

March 11th, 2010 at 3:12 pm

@Shinobi:

I think the most probable parameter for this is Diffuse Intensity. But of course, it change the material visually as well, which is only natural. From my quick test in Blender it really affects the indirect lighting.

But theoretically, the light reflection should be affected also by the surface roughness (smoothness), which results in diffuse of the light. In Blender it is probably Specular Hardness parameter that is closest to that characteristic (?). Or maybe it could be controled by different Diffuse Shader Models.

But I’m not sure how much it could be of the difference, while recent the only method for IL is point based (Approximate) not raytraced.

What is the viewpoint of the competent person, Brecht?

March 11th, 2010 at 11:07 pm

Interesting what you’re saying but has it effectively coded in Blender IL yet? I mean are Diffuse Intensity and Specular Hardness influencing color bleeding yet? Or is Emit value only being taken in account in computation…?

For what I understood IL in Blender now is a the level of raytracer, so it is not Approximated, or not only. Approximated approach was before Brecht coded raytracing IL.

March 12th, 2010 at 5:47 am

I hope that the parameters aren’t linked Permanently! I’m an engineer and have to use Inventor to do renders of my work for the sales guys. They ooh and ahh over it and I go out of my mind because they’re crap! In Inventor the controls for Specularity and Reflectivity are linked and the only way that I can make something shinny, like plastic pipes which I have to do alot, is to give them a mirror finish! Please don’t link them just because they should be related to each other, some of us might have other ideas!

March 12th, 2010 at 11:46 am

@Shinobi:

According to my (rather quick) tests, the reflected light color and strength is taken from resulting color in specific vertex of the model (after all changes to color values are applied, such as Diffuse Intensity etc.).

Emit value is not related to light rebounce, but it is the amount of light the surface is actively emitting.

My remarking of Specular Hardness and Diffuse Shader Model parameters are meant more or less as a question. That it wound be a good idea. Maybe. 🙂 I’d love to hear opinion from Brecht. Is that thought a bullshit?

And as I understood so far, the current working IL approach in Blender (at least in SVN trunk) is just Approximated. Which is someway reduced version of raytracing followed by approximation of the results between vertices.

March 13th, 2010 at 3:44 pm

Many congrats, Bretch!

Outstanding work.

March 13th, 2010 at 9:53 pm

Brecht,

fantastic work!

Will you be integrating functionality in indirect lighting that bounces the texture rather than the diffuse color? It would be great to have.

Thanks.

March 13th, 2010 at 11:33 pm

*BUMP*

I felt I needed to bump this thread and I hope that it is still read.

@ Brecht:

I know you’ve said that you’ve explored many different papers and solutions already, so I apologize in advance when I point you to this thread:

http://blenderartists.org/forum/showthread.php?t=93304

I’ll be posting in Blender artist too just in case this one goes unnoticed.

March 15th, 2010 at 11:08 pm

This kind of work blow my mind brecht, compiling and running svn is such a joy because of all the advances I see each time,

Continue doing your thing man, and don’t forget to enjoy it 🙂

March 17th, 2010 at 1:04 am

In what build is the GI? It doesn’t seem to be in any of the latest builds on graphicall…

March 17th, 2010 at 3:47 pm

it’s in a branch, there’s no GI yet

March 19th, 2010 at 4:49 pm

render25 branch, not in trunk yet

March 19th, 2010 at 5:05 pm

“Will you be integrating functionality in indirect lighting that bounces the texture rather than the diffuse color? It would be great to have.”

Definitely, it should be a must have feature, it was really great!

We could avoid things like this:

http://d.imagehost.org/0223/gi.png

What do you think Brecht?

March 20th, 2010 at 11:00 pm

Hi Brecht.

I came across this paper.. Maybe it would be useful.

Temporally Coherent Irradiance Caching for High Quality Animation Rendering

http://www.mpi-inf.mpg.de/resources/anim/EG05/

March 20th, 2010 at 11:13 pm

One more..

An Approximate Global Illumination System for Computer Generated Films

http://www.tabellion.org/et/paper/index.html

Abstract:

Lighting models used in the production of computer generated feature animation have to be flexible, easy to control, and efficient to compute. Global illumination techniques do not lend themselves easily to flexibility, ease of use, or speed, and have remained out of reach thus far for the vast majority of images generated in this context. This paper describes the implementation and integration of indirect illumination within a feature animation production renderer. For efficiency reasons, we choose to partially solve the rendering equation. We explain how this compromise allows us to speed-up final gathering calculations and reduce noise. We describe an efficient ray tracing strategy and its integration with a micro-polygon based scan line renderer supporting displacement mapping and programmable shaders. We combine a modified irradiance gradient caching technique with an approximate lighting model that enhances caching coherence and provides good scalability to render complex scenes into high-resolution images suitable for film. We describe the tools that are made available to the artists to control indirect lighting in final renders. We show that our approach provides an efficient solution, easy to art direct, that allows animators to enhance considerably the quality of images generated for a large category of production work.

April 29th, 2010 at 10:02 pm

To answer the couple of BURP related posts here. We’re just in the final stages of getting the Blender 2.5A2 clients out for Win/Linux/Mac for the Renderfarm.fi publicly distributed rendering project.

As far as I have understood, the main issue with using BURP for rendering a scene in Sintel is the fact that the scenes require massive amounts of memory.

We’re also testing 64bit builds of the clients, which might just imply (in the future) that we could move onwards from the current 1,5GB scene memory requirement limit that we must enforce. Unfortunately I think that our efforts on making the service more accessible and stable will not be on time for the final renders of Durian/Sintel. Perhaps for the next open movie project though? 🙂

– Julius Tuomisto / Renderfarm.fi