Sculpting Development

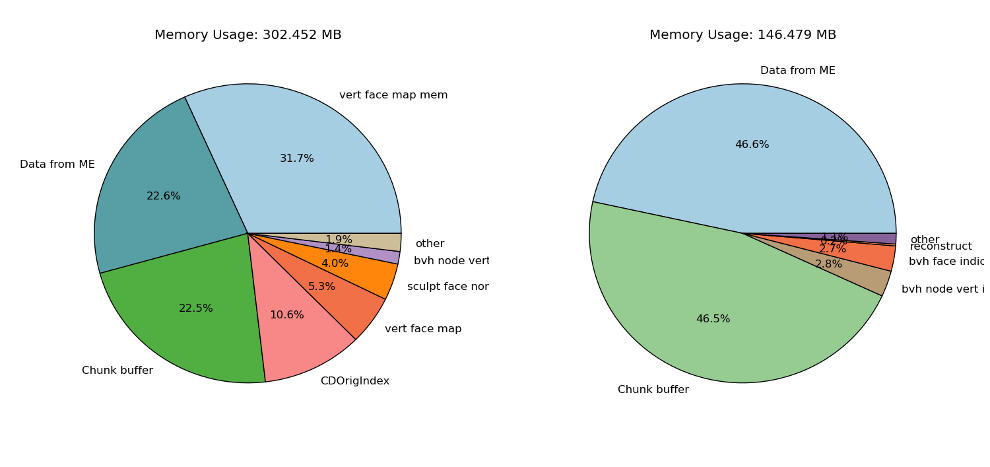

on November 16th, 2009, by Brecht

For the past two weeks I’ve been working on sculpting full time, which I’m quite happy about. It’s been a year since I’ve been able to focus on actual 3D computer graphics code for that amount of time, rather than on the Blender 2.5 project, which is mostly about user interface. The development is being done in a separate sculpt branch and is not stable yet, though test builds have appeared on graphicall.org.

I continued the work done by Nicholas Bishop to speed up sculpting. We started discussing the design about two months ago and decided on using a coarse bounding volume hierarchy (BVH), which Nicholas then implemented. It splits the mesh into nodes and organizes them in a tree structure. Each node has about 10000 faces, which is much coarser than a typical BVH used for raytracing or collision detection. We wanted the structure to have very low memory overhead and to be relatively quick to build too.
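To make the idea of a coarse BVH concrete, here is a minimal sketch in Python. It is not the code from the sculpt branch: the names `Node`, `build` and `leaves` are made up for illustration, the split rule (median along the longest axis) is an assumption, and for brevity the boxes are computed from face centroids, whereas a real implementation would bound the face vertices.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Node:
    bb_min: Vec3
    bb_max: Vec3
    faces: List[int] = field(default_factory=list)  # filled for leaves only
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def bounds(points: Sequence[Vec3]) -> Tuple[Vec3, Vec3]:
    """Axis-aligned bounding box of a non-empty list of points."""
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def build(centroids: Sequence[Vec3], face_ids: List[int],
          leaf_limit: int = 10000) -> Node:
    """Split faces until each leaf holds at most leaf_limit faces.

    Assumes face_ids is non-empty and leaf_limit >= 1. The large default
    mirrors the "about 10000 faces per node" figure from the text.
    """
    bb_min, bb_max = bounds([centroids[f] for f in face_ids])
    if len(face_ids) <= leaf_limit:
        return Node(bb_min, bb_max, faces=list(face_ids))
    # Assumed split rule: median split along the longest box axis.
    axis = max(range(3), key=lambda a: bb_max[a] - bb_min[a])
    ordered = sorted(face_ids, key=lambda f: centroids[f][axis])
    mid = len(ordered) // 2
    return Node(bb_min, bb_max,
                left=build(centroids, ordered[:mid], leaf_limit),
                right=build(centroids, ordered[mid:], leaf_limit))


def leaves(node: Node) -> List[Node]:
    """Collect all leaf nodes, left to right."""
    if node.left is None:
        return [node]
    return leaves(node.left) + leaves(node.right)
```

Because the leaves are so large, the tree itself has very few nodes compared to the number of faces, which is where the low memory overhead and quick build time come from.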

The BVH is central to the various optimizations that were done. We use it for:

- Raycasting into the BVH to find the sculpt stroke hit point.

- Recomputing normals only for changed nodes.

- Creating more compact OpenGL vertex buffers per node.

- Redrawing only nodes inside the viewing frustum.

- Multithreaded computations by distributing nodes across threads.

- Storing only changed nodes in the Undo buffer.
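Several items in the list above share one pattern: find the nodes a brush stroke touches, flag them, and later do the expensive work (normals, vertex buffers, undo) only for flagged nodes. The following Python sketch illustrates that pattern under stated assumptions; `Node`, `apply_stroke` and `flush_dirty` are hypothetical names, the brush is modelled as a sphere, and the actual per-node work is left out.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Node:
    bb_min: Vec3
    bb_max: Vec3
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    dirty: bool = False  # set when a stroke touched this leaf


def sphere_hits_box(center: Vec3, radius: float,
                    bb_min: Vec3, bb_max: Vec3) -> bool:
    """True if the sphere overlaps the axis-aligned box."""
    d2 = 0.0
    for c, lo, hi in zip(center, bb_min, bb_max):
        nearest = min(max(c, lo), hi)  # closest point of the box on this axis
        d2 += (c - nearest) ** 2
    return d2 <= radius * radius


def leaves_in_brush(node: Node, center: Vec3, radius: float,
                    out: Optional[List[Node]] = None) -> List[Node]:
    """Collect leaves whose box overlaps the brush, skipping whole subtrees."""
    if out is None:
        out = []
    if not sphere_hits_box(center, radius, node.bb_min, node.bb_max):
        return out
    if node.left is None:
        out.append(node)
    else:
        leaves_in_brush(node.left, center, radius, out)
        leaves_in_brush(node.right, center, radius, out)
    return out


def apply_stroke(root: Node, center: Vec3, radius: float) -> List[Node]:
    """Mark every leaf under the brush as dirty and return those leaves."""
    touched = leaves_in_brush(root, center, radius)
    for leaf in touched:
        leaf.dirty = True
    return touched


def flush_dirty(node: Node) -> int:
    """Clear dirty flags and return how many leaves needed an update.

    In a real system this is where normals would be recomputed and the
    node's vertex buffer refreshed; that work is omitted here.
    """
    if node.left is None:
        if node.dirty:
            node.dirty = False
            return 1
        return 0
    return flush_dirty(node.left) + flush_dirty(node.right)
```

The same list of touched leaves could also be handed out to worker threads or copied into an undo step, since each leaf owns a disjoint set of faces.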

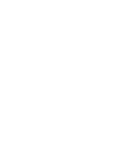

Beyond that, sculpt mode memory usage has been reduced in other places as well, approximately halving it in total, with some additional gains in the undo buffer which are a bit more difficult to quantify. Sculpting performance has improved too, though it is difficult to compare it with 2.4x, as strokes are not spaced the same way. Where the BVH clearly shines is when you work on only a smaller part of a full high resolution model: in that case only nearby vertices need to be taken into account, which is much faster. Drawing performance is considerably faster as long as vertex buffers fit in GPU memory (or are supported at all).

The next step that I’m working on is improving multires to better take advantage of this system. That’s turning out to be more tricky than the initial work I did, but it’s starting to get somewhere. Multires has the potential to be more memory friendly than regular meshes, but it requires more work to get it even on the same level, and then some more to get it more memory efficient. Performance is an issue as well, since it requires very high resolution subdivision surface computations, and applying and recomputing displacements.

We’re looking into on-demand loading of multires displacements, either from the .blend file or an external file. This would mean that you only need to have the displacements in memory when you are actually sculpting (in sculpt mode), and not when e.g. animating. It’s tricky though as there isn’t really a good precedent for this in Blender, so we’re looking into different ways to implement it.
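As a rough illustration of what on-demand loading could look like, here is a small Python sketch. It is only one possible shape for the idea and not a description of what will end up in Blender: the class name `LazyDisplacements`, its methods, and the file layout (a flat run of 32-bit floats in an external file) are all assumptions made for the example.

```python
import array
from typing import Optional


class LazyDisplacements:
    """Keeps displacement values on disk until sculpt mode needs them."""

    def __init__(self, path: str) -> None:
        self.path = path  # hypothetical external file of raw 32-bit floats
        self._data: Optional[array.array] = None

    @property
    def loaded(self) -> bool:
        return self._data is not None

    def enter_sculpt_mode(self) -> array.array:
        """Read the displacements into memory on first use."""
        if self._data is None:
            data = array.array("f")
            with open(self.path, "rb") as fh:
                data.frombytes(fh.read())
            self._data = data
        return self._data

    def exit_sculpt_mode(self) -> None:
        """Write edits back to disk and free the in-memory copy."""
        if self._data is not None:
            with open(self.path, "wb") as fh:
                self._data.tofile(fh)
            self._data = None
```

While animating, an object holding such a handle would carry only the file path, so the memory cost of the sculpted detail is paid only between `enter_sculpt_mode()` and `exit_sculpt_mode()`.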

Brecht.

November 16th, 2009 at 9:23 pm

Absolutely amazing. Maybe this will get me to start sculpting more often… Great work guys. I really appreciate it.

November 16th, 2009 at 9:24 pm

So cool ! But I want the code !

November 16th, 2009 at 9:28 pm

I’ve been testing every sculpt build that comes up on graphicall and I can’t believe what you’ve done with it. It’s amazing the improvements you’ve made in such a short time. I was never very impressed by Blender’s sculpt mode due to its inability to handle high poly counts, but on the first release I was able to get about 3 or 4 mil. Keep it up mate.

November 16th, 2009 at 9:52 pm

These improvements will certainly make me more apt to use sculpt in Blender, thanks for the work in this area. Whatever Brecht puts his mind to he can do!

November 16th, 2009 at 9:58 pm

Very exciting work !

Is it only related to the use of multires modifier or could it be used to increase standard fluid simulation’s resolution ?

November 16th, 2009 at 10:05 pm

great work thus far. sounds like quite the undertaking, but the benefits it’s bringing to Blender look to expand its user-base and the abilities of Blender for production environments(oh yeah, Durian too ;-). Thanks for your hard work.

November 16th, 2009 at 10:15 pm

the last 7 lines are so clever and inspiring!

November 16th, 2009 at 10:29 pm

Fantastic! I’ve been limited with sculpting before due to the performance, this is so cool and so important! (we are not worthy!)

The storage/visibility – there’s a lot of new stuff going in external files, which is great for simbake, but I like the all-together .blend bundle, and for one char model it seems to me that the multires should be part of that. Still, interesting that, now I think about it, I almost never actually want to see sculpted data in the viewport – one need only know it’s there for render or bake, and it only needs to be visible when sculpting or when manually shown.

Could visibility/renderability be toggled for a multires modifier like objects in outliner? Then would it be possible to skip it during loading, unless that visibility was on? Ah, what do I know? Just really excited about the great work you’re doing!

All the best!

November 16th, 2009 at 10:38 pm

Wot! impressive work! Gonna grab a test build and play around with it

November 16th, 2009 at 10:51 pm

Looking great! Blender is improving more and more everyday with improvements from all fields, from rendering to UI to sculpting. I just hope this new improvement will be included in the 2.5x range when it comes out. Also is anyone working on a node based mesh system for other things such as rigid body physics?

In any case keep up the great work!

November 16th, 2009 at 9:53 pm

That’s seriously impressive! Nice work!

November 16th, 2009 at 11:09 pm

Good lord….

You can’t imagine how important this is and how exciting this development is. I’m glad that such a talented dev joined the sculpting dev efforts.

Already tested some builds and it feels like never before in Blender, sooo clay.

Memory and speed… I never thought Blender could get this far for sculpting. Everybody is talking about software tricks for Zbrush, so I thought hardware and Blender’s 3D viewport complexity couldn’t handle that kind of acceleration.

But each step you and Nicholas have made in the last weeks makes me very hopeful about Blender sculpting tools.

And now…. on-demand multires displacement!… 8.000.000 polys -> 300MB!… the hidden potential of multires to use even less memory…

Just too much.. and I love it!

Thanks for all the efforts on this side of Blender!

November 16th, 2009 at 11:17 pm

Only one word… wow!

November 16th, 2009 at 11:20 pm

Really been waiting for this exciting news about the sculpting feature.

I hope the micropolygon rendering task will be developed soon after finishing the sculpting mode.

Thanks a lot, guys!

November 16th, 2009 at 11:29 pm

Hey this is great news!!!

I always loved the Sculptool in Blender! With these improvements it is much more fun to work with!!

Keep us up to date!!!

May we hope to get Sculptlayers in Blender too? This would be great!!!

Anyway!! You are doing an awesome job there!!!! Good luck!

November 17th, 2009 at 12:30 am

Impressive, most impressive!

November 17th, 2009 at 1:17 am

Eight million polygons in not even 300 Megabytes?

Damn.. and I was thinking the current code in the sculpt branch already kicked some ass.. so, it’s going to be even much better than that?

Woah. Woah. Woah.

Where’s that Brecht fan club again? I wanna sign up!

November 17th, 2009 at 1:21 am

I can’t wait for the movie, it looks amazing so far. Even more so, can’t wait for 2.5. YEAH!!!

November 17th, 2009 at 2:00 am

Here and there i read about plans of going micropoly with the internal renderer. Is that true?

November 17th, 2009 at 2:47 am

Way to go, Brecht!

November 17th, 2009 at 2:48 am

@lsccp, not necessarily micropolygons (as used by renderman & reyes rendering engines), but being able to load in high poly geometry and free it once the render tile is finished will probably be needed for durian.

November 17th, 2009 at 3:46 am

I think we should have the ability to sculpt directly into normal or displacement maps. They could be added as an option to multires.

Basically, that would be for computers that are too slow to sculpt at full res, on models that would be reduced to maps anyway.

November 17th, 2009 at 4:22 am

It’s all… magic.

November 17th, 2009 at 5:47 am

Micropoly feature will be cool… I don’t know, but for example Vray doesn’t mind about polycount, it minds just shader, number of objects and rendering settings!

this is cool because you can render a LOT of polygons

great work!

November 17th, 2009 at 7:05 am

Interesting. The current version (on Windows) crashes trying to subdivide the cube to greater than about 1.6 million polygons (virtual memory blows out beyond 2Gb); but at 1.6 million faces, the performance is incredible and memory is only around 200Mb.

Looking forward to the next update!

November 17th, 2009 at 7:11 am

Loving it!

November 17th, 2009 at 7:23 am

Bravo!!!!

You are great!!!

thanks for all the hard work!

November 17th, 2009 at 7:35 am

wow it is interesting

I think it can make high detail on low poly objects better

November 17th, 2009 at 8:27 am

I’m still in a state of utter shock and disbelief. 8 million polys and under 300MB of RAM used, that is truly gobsmackingly amazing. I shudder to think what you will think of next.

November 17th, 2009 at 10:03 am

Awesome!

I think this will be one of the most important features of the new Blender! With superb sculpting B. can achieve great popularity!

thx

November 17th, 2009 at 11:31 am

That’s simply Amazing!!

November 17th, 2009 at 12:41 pm

Very exciting news Brecht! Kudos to yourself and Nicholas for undertaking such a unique project. The potential of this sort of workflow is way beyond what the commercial apps are offering. It will be great to be rid of the endless back and forth workflow between ZB and 3d app… GOZ is great but this is a different kettle of fish again

An idea that comes to mind, is the option of having a normal map projected on the fly onto your multires model , when you are on the lower levels. If you are on say level 1 or 2 , then the on-the-fly normal map (generating normals which are the difference between your current level and the top level) will give a closer approximation of the model when animating in the GLSL viewport.

Would save having to crank up multi res levels when animating many multires characters in the same scene…

November 17th, 2009 at 1:04 pm

Congrats to you and Nicholas, Brecht.

Although allowing the internal renderer to make direct usage of the hi res mesh for rendering is good, I suspect that there are probably reasons we will still want to have a good baking to displacement/normalmap workflow.

1) Weight painting might be a major challenge with a high res mesh

2) How does uvmap and other data increase the size of the ‘mesh’ data?

3) Changing a low res object topology and rebaking is probably easier than the editing of the multires mesh and keeping the high detail mesh to keep the same form with the new topology

4) Integration with external renderers will likely be far more headache inducing, and probably not possible in most cases

5) Integration with external pipelines in general will be much harder without baking to displacement map.

November 17th, 2009 at 2:01 pm

acro, I dislike adding external files as well, but in practice I think it will be necessary. Think of Sintel and all her accessories having sculpted details, and saving all of that every time you save the .blend file: it will be a huge file, making saving quite slow even when you’re only doing rigging, for example. One way to think of this is as a texture file, so we could support packed and non-packed displacements in a similar way.

Regarding visibility toggle, we intend to add a sculpt mode subdivision level for multires next to viewport and render level.

MTracer, normal map painting might be added. Sculpting directly into a displacement map is not something that I think would work on slower computers, in fact I think that is the kind of optimization that would only work on very fast ones, as displacing things on the fly for display at these resolutions is relatively slow even on the more expensive graphics cards at this point.

Max Puliero, VRay is limited in polycount, it just does better adaptive subdivision so you don’t notice it as quickly.

B.Tolputt, I’m hoping the new multires based code will work better on Windows. The memory manager there deals poorly with large memory chunks, so I’m trying to avoid them.

Jamez, I’ve considered that kind of thing, but it seems unlikely that the effort to implement it is worth it for this project. A better viewport preview of displacement while animating or setting up shots is nice, but unlikely to help much in terms of productivity.

LetterRip, 1) and 2) are non-issues, weight painting and uv mapping are still done on the low resolution model. 3) is a valid point but rebaking can be made to work into displacements. Regarding 4) and 5), we already have displacement map baking, and it can be improved, but this project is not about integration with external applications, so we’re not going to spend time on that.

November 17th, 2009 at 3:52 pm

Would it be possible to make a direct conversion from “tangent space displacement values” to a displacement map?? Is that what you mean by “no extra manual steps by user”?

That way we wouldn’t need to make a model to model baking.

Everytime I have to do that with a high poly character it takes forever…

The branch is looking great!!!

November 17th, 2009 at 4:13 pm

“displacing things on the fly for display at these resolutions is relatively slow even on the more expensive graphics cards at this point.”

Even though it would be a great feature IMO, this would require vertex texture fetches through vertex shaders to be any effective. If done CPU-side it will be a killer.

November 17th, 2009 at 5:12 pm

I didn’t mean that this conversion should be done in real time for displaying in the viewport. It would be great to have it as the “make displacement map” button.

November 17th, 2009 at 6:16 pm

@Brecht: my ABSOLUTE hero. Side note: will it, at some point, be possible to incorporate the Wacom Intuos 4 drivers somehow? This board has so much extra functionality and practically none works in Blender/Blender Sculpt.

And I really, in fact, just came from the ZBrush website looking at the cost. 400€ isn’t that bad, but after all I wanna stick with Blender and it sounds like this will work! Sculpting seems to become very important, so what you do for everybody makes you one of the Blender High Priests!

@all: I personally think there should be something like a training DVD available for Blender sculpting once it has been refined. I know I’m supposed to “play around”, but my comp isn’t really that responsive with sculpt, so playing is no fun at all. Just like the paintbrush sometimes is – SLOOOOOW (while I can paint 600dpi in Photoshop without probs). Maybe someone can put something together? There are a few good Blender sculptors I know about, but they don’t have TIME… or don’t want their voices on Video

November 17th, 2009 at 6:53 pm

Panoramic Skies:

http://www.blendercookie.com/2009/11/01/textures-panoramic-skies/

licensed as CC Attribution 3.0

November 17th, 2009 at 8:25 pm

Great work as always Brecht !

Will it be possible to use TIFF image files (32 bits per channel) for the displacement data?

As you are working on it, I hope detailed displacement maps can be rendered even if they come from outside. It’s not clear to me how you are going to store the displacement data, but if this could be converted TO and FROM image files it would be a really good thing. I’m thinking about a workflow with different programs (for example displacement maps made in Zbrush and rendered in Blender).

Bye

November 17th, 2009 at 8:48 pm

It’s been my observation that displacements are usually done by the texture artist, and the base mesh done by someone else. So splitting displacement/base mesh into separate files is definitely more studio friendly.

I also applaud your efforts to add the feature, despite the lack of any ‘precedent’ application to base it on. Blender is blazing its own trail!

November 17th, 2009 at 9:05 pm

What about the memory consumption when subdividing? That is normally what makes Blender crash, by maxing out the RAM usage on 32 bit.

here’s my graph for blender when subdividing a cube, little peak is 400.000 to 1.6 million polys and big peak is from 1.6 million where it peaks out and crashes.

http://www.pasteall.org/pic/196

here’s the graph for zbrush

first peak is 500.000 to 2 million and second is 2 million to 8 million

http://www.pasteall.org/pic/197

max usage was 1.3gb ram for zbrush

November 17th, 2009 at 11:19 pm

Yeah, the memory temporarily doubles when subdividing using the multires modifier, making it impossible to use all of the available working memory for sculpting. If that’s going to be fixed with this update as well.. well, you really will be a hero.

November 18th, 2009 at 1:34 am

Maybe you can add layers to put more definition and different textures like Zbrush, and something like Subtools, and a lazy mouse :). That would be great.

You SHOULD work more ):( .

November 18th, 2009 at 4:21 am

awesome 8 mill polys and only 300megs on the mem clock:)

i love the sculpting tool so i’m really happy to see it’s going to be improved too!

thanks so much for the improvements that u and the other devs are trying to get implemented!!!

best regards!

November 18th, 2009 at 7:14 am

Impressive memory savings already. Nice one : )

November 19th, 2009 at 7:09 am

Phwoar…

November 21st, 2009 at 12:39 am

Brecht, you do great things!