Render Development Plans

on January 13th, 2010, by Brecht

I’ve moved to working on the rendering engine, which will be my main focus for the next 3 months. There’s still some fixing needed on the sculpt tools, but I won’t make any more big changes there. The planned rendering development consists of four parts.

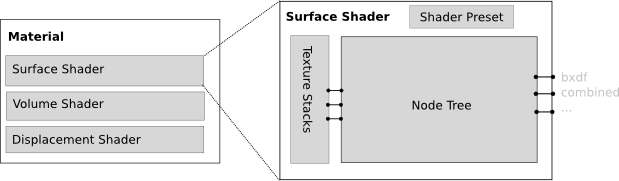

Shading System

The shading code will be refactored to make a clean separation between materials and lamps, and some corrections will be made to the current lighting calculations. But mostly the intention is a more modern system to design materials, one that is not geared only to direct lighting from lamps, but also works well for indirect light. Nodes will also be central to the way materials work rather than something glued on top. It’s basically a merger between physically based rendering materials that are designed for advanced lighting algorithms, and production rendering materials that can do things like output passes or use some tricks for speed.

The current design is on the wiki. We most likely won’t implement the full thing for Durian, but the intention is to implement the foundation and the parts that we use ourselves. Improved raytracing can then be implemented by others later.

Further, people have been asking about OpenCL for GPU acceleration. That is something we’re not planning; it wouldn’t be even remotely possible given the time constraints. The recently released Open Shading Language by Sony Imageworks would also be good to have, but a shading language is not something we can spend time on now, though I think what they are doing is in the same spirit: bringing together physically based and production rendering. I’m looking at their design to see how compatible we can be, so that someone can implement support for it later. It looks quite similar, the big difference being of course that we are building a node system and they’re making a shading language.

Indirect Diffuse Light

There’s already an incomplete implementation in trunk based on the approximate AO algorithm. We’ll try to extend this method to do proper shadowing. This could be done using either the recent micro-rendering algorithm (a bit simpler and more flexible) or the Pixar technique (proven to work). The main challenge here is keeping performance high enough. It is expected to be quite a bit slower, but hopefully still faster than raytracing, and it should work on scenes that don’t fit in memory.
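To make the idea of gathering light from a point cloud more concrete, here is a minimal sketch in Python. It is not the code in trunk. It assumes the scene has already been converted to disk-shaped points ("surfels") with a position, normal, area and outgoing radiance, and it uses a common disk-to-point form factor approximation, A·cosθe·cosθr / (π·r² + A). The names `Surfel`, `form_factor` and `gather_indirect` are hypothetical. Note that it simply sums all contributions without any shadowing between points, which is the part the micro-rendering or Pixar-style rasterization step would have to add.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class Surfel:
    """Hypothetical point-cloud element: a small oriented disk."""
    position: Vec
    normal: Vec       # assumed to be unit length
    area: float
    radiance: float   # single channel, for brevity


def form_factor(p: Vec, n: Vec, s: Surfel) -> float:
    """Approximate fraction of light leaving surfel s that arrives at point p with normal n."""
    d = sub(s.position, p)
    r2 = dot(d, d)
    if r2 == 0.0:
        return 0.0
    r = math.sqrt(r2)
    w = (d[0] / r, d[1] / r, d[2] / r)         # unit direction from receiver to surfel
    cos_r = max(0.0, dot(n, w))                # surfel must be above the receiver's horizon
    cos_e = max(0.0, -dot(s.normal, w))        # surfel must face the receiver
    return s.area * cos_r * cos_e / (math.pi * r2 + s.area)


def gather_indirect(p: Vec, n: Vec, surfels: Iterable[Surfel]) -> float:
    """Unshadowed sum over all surfels; occlusion between surfels is ignored here."""
    return sum(form_factor(p, n, s) * s.radiance for s in surfels)
```

A real implementation would traverse a tree of clustered points rather than looping over every surfel, so that distant groups are evaluated as a single aggregate.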

Disk Caching

Memory usage is a big problem, especially when rendering in 4K. The plan is that many data structures in the rendering engine will be cached to disk and loaded only when needed. The main implementation issues here are how to do this efficiently with threads and how to avoid latency killing render performance. There are many things that could be cached to disk, and hopefully we can implement it for most of these:

- Image textures

- Shadow maps

- Multires Displacements

- Smoke/Voxel data

- SSS tree

- Point Based Occlusion/GI tree
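As a rough illustration of the kind of cache this implies, the Python sketch below keeps a bounded number of blocks in memory, writes the least recently used ones to disk when the limit is exceeded, and reloads them on demand. It is only a sketch under stated assumptions, not a design for the render engine: the class name `DiskCache` and its methods are hypothetical, keys are assumed to be safe to use as file names, and values are assumed to be picklable.

```python
import os
import pickle
import tempfile
import threading
from collections import OrderedDict


class DiskCache:
    """Hypothetical LRU cache that spills evicted entries to disk."""

    def __init__(self, max_in_memory, directory=None):
        self.max_in_memory = max_in_memory
        self.directory = directory or tempfile.mkdtemp(prefix="render_cache_")
        self._memory = OrderedDict()      # least recently used entry first
        self._lock = threading.Lock()     # one lock for simplicity

    def _path(self, key):
        # Assumption: keys are simple strings that are valid file names.
        return os.path.join(self.directory, f"{key}.bin")

    def _evict(self):
        # Write least recently used entries to disk until we fit again.
        while len(self._memory) > self.max_in_memory:
            old_key, old_value = self._memory.popitem(last=False)
            with open(self._path(old_key), "wb") as f:
                pickle.dump(old_value, f)

    def put(self, key, value):
        with self._lock:
            self._memory[key] = value
            self._memory.move_to_end(key)
            self._evict()

    def get(self, key):
        with self._lock:
            if key in self._memory:
                self._memory.move_to_end(key)
                return self._memory[key]
            # Not resident: load from disk (FileNotFoundError if never stored).
            with open(self._path(key), "rb") as f:
                value = pickle.load(f)
            self._memory[key] = value
            self._evict()
            return value

    def resident_keys(self):
        with self._lock:
            return list(self._memory)
```

This version holds the lock while reading and writing files, so one thread waiting on the disk stalls all others. That is exactly the latency problem mentioned above; avoiding it would need finer-grained locking or loading blocks in the background.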

Per Tile Subdivision

This is probably the most complex one: we want to subdivide meshes per tile to render very finely displaced meshes. One challenge is that this requires a patch based subdivision surface algorithm that does not need the full mesh in memory. The existing subdivision library could be modified to do this, but it would not be very efficient. Another possibility is to integrate the QDune Catmull-Clark code.

Other problems are grid cracks, though perhaps these are not too difficult to solve if we take them into account from the start. Another concern is the filtering of multires displacements. This is quite a complicated problem, and if it doesn’t get solved we’ll need a simple workflow for baking multires to displacement maps. Existing displacement code also needs to be improved to do filtering properly.

Other issues are how to deal with threading, sharing diced patches between threads, and distributing objects/patches across tiles efficiently. Will be a fun challenge :).
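One small piece of this, distributing patches across tiles, can be sketched as a binning step: each patch is assigned to every tile its screen-space bounding box overlaps, so a tile only needs to dice the patches in its own bin. The Python below is a minimal sketch under assumptions, not a proposal for the actual data structures. The function names are hypothetical, bounds are given in pixels as `(xmin, ymin, xmax, ymax)` with the maximum treated as exclusive, and displacement is assumed to be already included in the bounds.

```python
import math


def tiles_for_bounds(xmin, ymin, xmax, ymax, tile_size, width, height):
    """Return (tx, ty) indices of all tiles overlapped by a pixel bounding box."""
    # Clip the box to the image; patches fully outside touch no tile.
    xmin, ymin = max(xmin, 0), max(ymin, 0)
    xmax, ymax = min(xmax, width), min(ymax, height)
    if xmin >= xmax or ymin >= ymax:
        return []
    tx0 = int(xmin // tile_size)
    ty0 = int(ymin // tile_size)
    tx1 = int(math.ceil(xmax / tile_size))   # exclusive upper tile index
    ty1 = int(math.ceil(ymax / tile_size))
    return [(tx, ty) for ty in range(ty0, ty1) for tx in range(tx0, tx1)]


def bin_patches(patches, tile_size, width, height):
    """Map each tile to the list of patch ids whose bounds overlap it.

    patches: dict of patch id -> (xmin, ymin, xmax, ymax) in pixels.
    """
    bins = {}
    for patch_id, bounds in patches.items():
        for tile in tiles_for_bounds(*bounds, tile_size, width, height):
            bins.setdefault(tile, []).append(patch_id)
    return bins
```

A patch that straddles a tile border ends up in several bins, which is where the question of sharing diced patches between threads comes in: either each tile dices it again, or the result is cached and shared.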

For Interested Developers

Of course these are just plans and we’ll see how far we get, though I hope we can do all of them. If you’re a developer interested in helping out, the disk caching would be a good project to pick up, as it doesn’t require much knowledge of the render engine. Approximate indirect diffuse lighting could also be a good thing to help with: most of the data structures are already there and the point cloud is already built, so it is mostly a matter of implementing the rasterization.

Brecht.

January 13th, 2010 at 10:19 pm

woa!

January 13th, 2010 at 10:32 pm

cool. well done Durian.

January 13th, 2010 at 10:46 pm

Very interesting and exciting! New toys!

January 13th, 2010 at 11:35 pm

Keep on doing this great work!!! We are by your side!!!

And also thanks for updating, i love to follow your progress/plans!!

January 13th, 2010 at 11:37 pm

wow Sounds like a huge troublesome mutation. Iirc, rendering is one area that you would like to work on deeper. So.. good luck, Brecht 🙂

January 13th, 2010 at 11:58 pm

O.o I can’t find my jaw!

January 14th, 2010 at 12:42 am

“I’ve moved to working on the rendering engine”

Erm, you didn’t sign it–it would be nice to know who you are 😛

🙂

January 14th, 2010 at 12:49 am

sounds pretty good, should make some big changes 🙂

too bad for OCL though :'( :'(, does that mean it will never be, or that you won’t have time in those 3 months.

About shaders and materials, do you plan to make it easier to load written glsl in a material instead of going through the python binding, so we could write glsl or re-use it, even for rendering? If it could work like hlsl in 3ds max that would be great, and it would make external glsl easier to use. The python binding is a bit of a pain and limited to the BGE 🙁

January 14th, 2010 at 12:59 am

Brecht please don’t stop the sculpting development.

Please!!!

There is a sculpting hype nowadays in the blenderartist forum:

Look in the blender-tests section, you will make big eyes!!!

I think the sculpting development is close to being finished. So please don’t stop the development.

Please Brecht!

January 14th, 2010 at 2:35 am

Are we seeing a stable release in 2011? Because this sounds like a lot!! So we are going to be messing around with the Beta version instead.

January 14th, 2010 at 3:07 am

OH MY GOD!

January 14th, 2010 at 3:12 am

This is the greatest news I could hope for!

Go Brecht go! I blindly trust you, and thinking of your talent at work on a single area for 3 months makes me really happy!

(it’s just too bad that February has only 28 days…)

January 14th, 2010 at 3:47 am

Brecht, one small request which I have been wanting for a long time. I have had problems when creating a forest-like scene: the huge number of polygons crashes Blender. This problem can be solved by a proxy system, i.e. we place low poly models which act as placeholders for high poly objects that might reside in a different blend file (so we can also make libraries :)). The renderer replaces the low poly ones with the high poly ones only while rendering. This can help a lot in huge scenes, and might also help a lot in Durian for rendering large scenes with many mesh objects.

an example is Vray proxies . http://vray.us/vray_documentation/vray_proxy.shtml .

I think this is the right time to get this thing done since u are refactoring some part of the renderer .

I would love to see this in blender .. a small feature yet very powerfull one 😀 . think bout it 😉 .

January 14th, 2010 at 6:30 am

This is exciting stuff! 😀 Good job and good luck!

I vote to implement both indirect diffuse lighting techniques, but starting with the micro-rendering one first. 😛

January 14th, 2010 at 6:54 am

don’t know very much about shaders but I think that a revision would be nice 😀

January 14th, 2010 at 8:21 am

Really an interesting post. I have one small question/request: will these updates allow for rendering SSS as a separate pass? I have seen this done in other programs, which allows for some interesting work with compositing.

January 14th, 2010 at 10:52 am

Very awesome post Brecht, keep us informed like this 😀

cheers

January 14th, 2010 at 11:51 am

Amazing!!! Any chance of creating a node->OpenCL/GLSL/OSL snippet generator? Dumb question?

Looking forward to amazing things! Great job!

January 14th, 2010 at 11:55 am

Wow!! Looks really cool! Good luck getting everything together.. =) I’m very thankful for the work you do..

-c-

January 14th, 2010 at 1:59 pm

Blender is not going to be in Kansas anymore !!! We are going to have the best app ever.

January 14th, 2010 at 2:25 pm

Looking forward to see how things will work out!

hmm with all the disk caching a SSD on sata 600 or usb3 would be nice to have…

January 14th, 2010 at 5:24 pm

“””This could be done using either the recent micro-rendering algorithm (a bit simpler and more flexible) or the pixar technique (proven to work).”””

sounds awesome! i think i even would prefer something like that over renderers like octane in most cases.

January 14th, 2010 at 5:53 pm

Sounds good.

One minor quibble with the blog – could you get someone to set it so that the author name is on the articles?

When you’re using terms like “I”, “me” etc. it’s nice to know who they refer to 😉

January 14th, 2010 at 7:19 pm

sbn..: I was thinking the same thing. Who is that “I”. Sometimes there is a name of the poster in the bottom of the message but sometimes not even that.

Names more visible please

January 14th, 2010 at 10:51 pm

sounds like a bunch of great developments to come 😀

January 14th, 2010 at 10:59 pm

Amazing! I truly truly hope that most of the mentioned improvements will be implemented. The render engine is the most important part of 3D software. It will make a major difference for Blender!

January 14th, 2010 at 11:08 pm

Long long time ago, during O.T. rendering days, I had a persistent problem when there were small faces in the scene (really small that after subdivided get even smaller) and consisted in some flashy flickering tiny white dots (way bigger then the faces that originated them) appearing in the animation.

A year and something later I rendered another animation thing and the same issue still was present.

I suppose that the event only shows in low resolution renderings, because I don’t spot them in BBB or ED… but anybody knows if this is a known issue?

January 15th, 2010 at 12:53 pm

“This could be done using either the recent micro-rendering algorithm (a bit simpler and more flexible) or the pixar technique (proven to work).”

Brecht, or Matt, who know… 🙂

if that can be fully implemented, it will answer to more than 90% of the Blender Community wishes and hopes from many years to now.

This is a core-feature Blender can miss no more.

All my encouragement for you and your hard work so far, and your promising plans!

January 15th, 2010 at 6:41 pm

pretty cool 😀 most of it i don’t understand just yet, (:-D) but a better Render engine is good 😀

January 15th, 2010 at 6:44 pm

@shrinidhi

Developers already made this feature available in “blender 2.5 Alpha 0” release.

look for Instancing in the following page:

Blender2.5 Alpha 0 ray tracing optimization

http://www.blender.org/development/release-logs/blender-250/ray-tracing-optimization

Blender 2.5 Alpha 0 new features

http://www.blender.org/development/release-logs/blender-250

January 15th, 2010 at 8:38 pm

Off topic…

2.5 – BGE – Sensor/Mouse/Pulse ON/LBM – jammed, though I release LBM… :-(((. The gun doesn’t stop shooting…??? Why, oh why?!?!? A bug or what?

January 16th, 2010 at 12:00 am

Speaking about point based AO and GI, maybe this paper http://graphics.cs.williams.edu/papers/AOVTR09/

will be very interesting for render engine developers.

January 16th, 2010 at 12:07 am

Also the Screen Space Photon Mapping technique can be very useful for accelerating the GI rendering speeds.

See the paper here: http://graphics.cs.williams.edu/papers/PhotonHPG09/

January 16th, 2010 at 1:50 am

@Brecht: does this mean that you’re also going to improve the preview system in Blender, let’s say with a progressive type of rendering ?

January 16th, 2010 at 5:17 am

Okay, I have no idea what any of that meant, but if it makes Durian even better…go for it!! 🙂

January 16th, 2010 at 8:57 am

Disk Caching: please don’t forget the lessons of the Varnish proxy. http://varnish.projects.linpro.no/ . Don’t fight with the OS. Most OSes are good at managing virtual memory. If anything, use more memory mapping of files, so reducing the data that has to be sent to the swapfile. Most OSes will automatically drop mapped files when needed.

Squid is a classic case of disk caching fighting with OS caching. Swapfiles are not fast. Having to recover items from swap in order to transfer them to the disk cache completely undermines the purpose of disk caching.

Mem mapping prevents items in the Disk Cache entering the swapfile so removing the risk of double slow drive event.

Basically we need a memory mapping of files management system. Clearing a memory map will not touch swap. Adding a memory map will not touch swap. So double handling event causing lag ie transfer from swap to disk or disk to swap is impossible. Lowest IO possible is important. Mapping can achieve this.

January 16th, 2010 at 2:48 pm

can anyone update the gallery please?

January 17th, 2010 at 1:35 am

So…

Is Sintel going to be Stereo 3-D? Please make it so…

January 17th, 2010 at 11:01 am

@Alaa El-din

Thanks for the headsup buddy 🙂 will take a look into it . 🙂 .

January 18th, 2010 at 8:22 pm

@francoistarlier: no particular improvements related to glsl or realtime rendering are planned.

@rogper, if you think it’s a bug, report it in the tracker. Z-buffer resolution is an issue at some point though, if you make things very small or large.

@Gianmichele, no, that is not part of the plan.

@MTracer, no stereo 3D at the moment, only when we get a sponsor for this.

@oiaohm, not sure what the practical implications of your suggestions are, a web proxy is quite a different beast than a rendering engine?

January 19th, 2010 at 11:33 am

Ok, so you are Brecht, cheers 😉

Please, keep us informed about the progress, this is so good and exciting to have such features in Blender!

January 20th, 2010 at 5:10 am

Are these changes in the rendering system going to change something in the baking system?

January 20th, 2010 at 7:45 pm

I think this is the right time to make the render passes customisable and more like professional renderers, so my compositor will stop hatin’ on me 😀

If you can make it it would be awesome and everyone could use blender in big pipeline projects.