Memory & JeMalloc

on April 15th, 2010, by ideasman42

While memory is a dry topic, many of you would have experienced Blender grind to a halt (or worse), with big files the workstation can’t handle.

Yesterday Beorn was having trouble loading a scene that was taking a lot of memory. After concluding it wasn’t a memory leak in Blender, I looked into why Blender would show 75 MB in use while the system monitor showed over 700 MB.

It turns out this is because the operating system can’t always deal efficiently with an application’s memory usage, and the process ends up using a lot more RAM than the application asked for.
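The gap between the two readouts can be checked by hand. The following is a minimal sketch, assuming a Linux system where `/proc/<pid>/status` exposes a `VmRSS` line in kB; the function names are made up for illustration and are not part of Blender or jemalloc.

```python
import re


def parse_status_kb(status_text, field="VmRSS"):
    """Return the kB value of a field from /proc/<pid>/status text, or None if absent."""
    pattern = rf"^{re.escape(field)}:\s+(\d+)\s+kB"
    match = re.search(pattern, status_text, re.MULTILINE)
    return int(match.group(1)) if match else None


def overhead_ratio(resident_kb, reported_mb):
    """How many times larger the OS resident size is than the application's own figure."""
    return resident_kb / 1024.0 / reported_mb


def read_rss_kb(pid="self"):
    """Read the resident set size (kB) of a running process; Linux only."""
    with open(f"/proc/{pid}/status") as handle:
        return parse_status_kb(handle.read())
```

With the figures above (75 MB reported by Blender, roughly 700 MB resident), `overhead_ratio(700 * 1024, 75)` comes out at about 9.3. A ratio that large on its own does not prove fragmentation, but it is the kind of mismatch that makes the allocator worth a look.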

So I tested jemalloc, an open-source drop-in replacement for the operating system’s memory allocation calls, used by Firefox, Facebook and FreeBSD according to its site.

I was surprised to find memory usage went down by up to 1 GB in some cases without noticeable slowdown, and jemalloc used less memory in almost every case.

It can also decrease render times in cases where the system starts to use virtual memory.

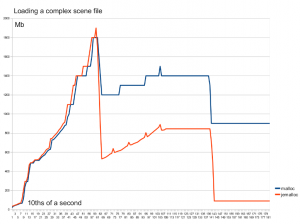

The first example loads a complex scene and then a blank file. Notice there is over 600 MB difference.

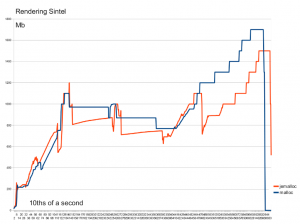

The second graph shows rendering the Sintel model. I’d like to have made a few more examples but don’t have much time right now.

While testing we found Blender exposed a bug in jemalloc’s thread cache. Jason Evans was kind enough to look into the problem for us, fixing it the next day in bugfix version 1.01.

JeMalloc is now used on every workstation. If all goes well we may include it with Blender, as Firefox does.

For more info see: http://www.canonware.com/jemalloc/

– Campbell

Notes…

- Hoard was also tested, but it didn’t improve memory usage all that much.

- Edited, now ‘decrease rendertimes’.

- Comparing against the default allocator in Linux (modified ptmalloc), one could argue the problem is caused by bad memory usage within Blender; from what I have read, the default malloc in Linux is quite good. Nevertheless, jemalloc may prove to be an advantage for us.

- For anyone who wants to test on *nix, you don’t need to rebuild Blender, just pre-load:

LD_PRELOAD=/usr/lib/libjemalloc.so blender.bin
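For anyone who would rather script the pre-load than type it each time, here is a minimal sketch of a launcher. The library path and `blender.bin` come from the command above; the function names are made up for illustration, and the fallback to the default allocator when the library is missing is an assumption about what a launcher should do, not how the studio's own script works.

```python
import os
import subprocess


def preload_env(lib_path, base_env=None):
    """Return a copy of the environment with lib_path placed first in LD_PRELOAD."""
    env = dict(os.environ if base_env is None else base_env)
    existing = env.get("LD_PRELOAD", "")
    # The dynamic linker accepts a colon-separated list; keep any earlier entries.
    env["LD_PRELOAD"] = f"{lib_path}:{existing}" if existing else lib_path
    return env


def run_with_allocator(command, lib_path, base_env=None):
    """Run command with lib_path preloaded, or unchanged if the library is missing."""
    if os.path.isfile(lib_path):
        env = preload_env(lib_path, base_env)
    else:
        # Assumed behaviour: fall back to the system allocator rather than fail.
        env = dict(os.environ if base_env is None else base_env)
    return subprocess.call(list(command), env=env)
```

A call such as `run_with_allocator(["blender.bin"], "/usr/lib/libjemalloc.so")` should then behave like the one-line command above. Swapping in a different allocator only means changing the path.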

April 15th, 2010 at 3:15 am

Second graph is broken (Error 404)… 🙁

April 15th, 2010 at 3:17 am

It should be /wp-content/uploads/2010/04/jemalloc_render.png

April 15th, 2010 at 3:20 am

Great job, Campbell! That’s a cool find. (FYI, your second graphic is not showing)

BTW, what is the configuration of the workstations being used to develop Durian?

April 15th, 2010 at 3:33 am

Stupid system memory allocators. JeMalloc sounds quite impressive. 😀 Awesome discovery, Campbell!

April 15th, 2010 at 3:56 am

Way to go, cutting down on sloppy memory usage is a plus for everyone!!!!!!!!! Two thumbs up!!!

April 15th, 2010 at 4:09 am

“It can also increases render times in cases where the system starts to use virtual memory.”

🙁

I hope you meant: decrease

🙂

April 15th, 2010 at 4:19 am

Nice but on which OS are you comparing those 2 allocators? Linux?

I know the Hoard allocator is also very efficient on multithreaded multiprocessor systems. It is also available for Windows and Solaris. Worth a try, especially on Windows:

http://www.hoard.org

April 15th, 2010 at 4:54 am

@david, unless I’m interpreting it wrong,

according to the graph he really meant increase… :/

April 15th, 2010 at 4:58 am

…or just install freeBSD on all the boxen.

April 15th, 2010 at 5:14 am

@Felix and David:

It depends on how you interpret it, as it does increase efficiency of memory, hence it decreases the actual use of memory! 😉

April 15th, 2010 at 5:42 am

Very neat. Sounds like yet another way to raise the ceiling and make room for the big dragon. Viva la render speed!

April 15th, 2010 at 6:58 am

Great job!!!

April 15th, 2010 at 9:09 am

” if all goes well we may include this with Blender as Firefox does.”

why not? if it fixes loading big scenes.

April 15th, 2010 at 9:21 am

Good news. I can’t render the new Sintel.blend on 4 GB RAM, maybe now I can.

April 15th, 2010 at 10:16 am

@John, I haven’t posted about this yet, but we’re using much the same setup as with Big Buck Bunny: Blender is compiled locally on each PC, and desktop icons point to a shell script on the server for building and running. So we can change how Blender starts and builds from one place, making it easy to switch allocators.

@Felix, it’s hard to say if this slows things down much without running more tests (time consuming). To be fair we should also compare on Windows & Mac to see if there is much difference. For now I’m satisfied it’s at worst slightly slower and at best can save the system from running out of RAM :).

@Anonymous Coward, sure, but that’s a lot of work to set up all these PCs with BSD; switching the allocator for Blender is trivial.

April 15th, 2010 at 10:30 am

Stupid system memory allocators. JeMalloc sounds quite impressive. 😀 Good stuff, Campbell.

April 15th, 2010 at 11:58 am

Woow, great Campbell! 🙂

What do you think of Eskil’s approach, which avoids linked lists of small elements and favors arrays, to avoid data fragmentation, keep memory aligned for SIMD purposes and avoid cache misses? Is a hybrid approach, with a linked list of big arrays instead of a standard linked list, feasible with Blender’s structure? Indeed, even if jemalloc is way better, there’s still a lot of memory “lost” (Blender still uses 800 MB when the blank file is loaded). I’m not a programmer, so sorry if this sounds stupid. I know that one drawback of ANSI C arrays is that they cannot be allocated on the fly, but say there’s a defined array size in Blender, for example 1 MB chunks, with the arrays connected by a linked list… a new memory manager…

http://news.quelsolaar.com/ (I am adding and subtracting)

If I remember correctly, Yves Poissant has said that cache misses are responsible for many slowdowns in render engines, mostly caused by fragmented memory, but would this imply a full, mammoth rewrite (not compatible with the tight Durian schedule)?

Regards

April 15th, 2010 at 2:27 pm

Sorry, I misinterpreted the figure (about the moment when the blank file is loaded), so I withdraw my statement about the lost memory…

Regards

April 15th, 2010 at 6:04 pm

Memory allocation is not dry! You take that back!

Seriously, the combination of hard computer science and 3D art is wonderful. I would love to see some more ‘technical’ posts here. Keep up the great work!

April 15th, 2010 at 6:37 pm

Hi guys!

I have tried it here. The machine is a notebook with a Turion 64 X2 and 2 GB of RAM, and it didn’t make any difference.

I have tried with Blender 2.49 and 2.5 alpha2.

I’ll be at home in a few hours and I’ll try with one of my models and I’ll post the results here.

[]’s,

Still

April 15th, 2010 at 7:44 pm

@ruddy, I haven’t done benchmarks with this personally, but I believe you’re correct. Vertex groups are one of the main offenders for small allocations. With rendering I think there is more to it than using arrays. We already use array chunks for faces, but there’s no one correct way to solve this, since it depends on how the data is accessed in each case: a ray-tree could be optimized to group nearby faces in memory, and image tiles may also help.

@RockyMtnMesh, I knew there were some of you lurking out there, otherwise I’d not have posted 🙂

@Still, to really test this well we would need more configurations. Of course, if users can test, this is great. What OS are you running, 32-bit or 64-bit? How did you test jemalloc?

April 15th, 2010 at 9:04 pm

The sponsor page mentions that you will be building your own renderfarm. Has that begun?

April 15th, 2010 at 10:13 pm

Great job! I’m currently working on a very large city scene that’s starting to slow my machine down a little. This is something I’ve really been hoping to have looked at.

Something I noticed between 2.5 beta and new 2.5 builds is that memory usage is 3 times higher than it was before.

April 15th, 2010 at 10:25 pm

Good work!!

April 15th, 2010 at 11:01 pm

@Jan de Vries, expect some news about this soon 🙂

@Zane Runner, this is worrying. Is this Blender’s readout of the memory or the operating system’s? Blender can print out its memory usage (SpaceBar, Memory Statistics), which could help you find the cause. Otherwise, if Blender’s readout in the header is very different from the operating system’s, this could be because of memory fragmentation.

April 15th, 2010 at 11:09 pm

Hey, great! There are some patches about memory, page faults and cold start-up time enhancements here:

http://blog.mozilla.com/tglek/

Good work!

April 15th, 2010 at 11:16 pm

I never looked at the system, I just noticed the readout on the top header in Blender next to the vert and face count.

I’ll download the latest 2.5 build I can get for 64-bit Windows and compare.

April 15th, 2010 at 11:36 pm

Never mind, I can’t find alpha 0 anymore, and alpha 2 uses just as much memory as the latest.

April 15th, 2010 at 11:37 pm

I agree with Rocky 100%. I just bought another 2GB of ram because blender is a bit of a pig. This could really help…

😀

MTracer.

April 16th, 2010 at 4:26 am

Awesome, posts like this are interesting, keep them coming.

RAM usage is something I’ve been hoping to see optimized for a while now, ever since playing around with the BBB production files, which always crashed Blender when rendering due to RAM, so this certainly looks good.

It would be great to hear how well this goes for Blender on Windows however.

April 16th, 2010 at 12:12 pm

Great! I have been following blender from the early days and each time is getting better and better. Great job.

April 16th, 2010 at 2:11 pm

I am a Windows user and I am looking forward to making big files with the 64-bit workstation I am getting this summer for graduation, so improving RAM usage would be greatly appreciated. I can’t figure out how to use these RAM optimizers, so if you plan to use them please build them into Blender so non-programming artists like me can reap the benefits too.

April 16th, 2010 at 9:17 pm

I don’t know how they rendered for previous projects. But I hope they are going to consider “parallel computing” when they start the final rendering process!

April 17th, 2010 at 3:48 am

This is something I like to see – information on how Durian is benefiting Blender development.

ideasman: Small allocations are the devil, to quote Mama Boucher (of ‘Water Boy’ fame), both in terms of memory fragmentation & the resulting side effect on memory requirements.

Whilst the jemalloc solution is a good one, and probably should be kept even if it is a tad slower (as I read it), the issue with looking at things from a “global perspective”, as opposed to the way memory is allocated for each part, is that you can only “fiddle around the edges”. If, for example, the Vertex Groups are making too many small allocations, the best solution is to refactor the way memory is allocated for their use, or the data structures they use. In C++, memory allocation can be tweaked per structure (through custom new/delete operators); but in stand-alone C I acknowledge this would be more difficult without strict adherence to allocation functions/macros.

And yes, I am aware this is all “hand-waving” & academic given the real situation is that you needed a quick, global solution to the problem to get Durian back on track. I commend you for finding the solution the way you did (I have a bad habit of looking into the micro problems before considering macro solutions). In fact, changing the underlying allocator would be one of the LAST things I’d do – wasting a lot of time in the process. Perfectionism often loses out against practical realism on the ground!

April 17th, 2010 at 7:14 pm

WOW. Just tried running with jemalloc. Sculpting is much much smoother experience…

April 18th, 2010 at 7:35 am

@B.Tolputt

“This is something I like to see – information on how Durian is benefiting Blender development.”

You might want to read the about section, especially the technical targets, take particular note of the last one mentioned:

Technical targets

* High detail multi-res modeling (sculpting) and render (micropolygons?)

* Fire/smoke/volumetrics & explosions

* Compositing using tiles/regions, so it becomes resolution independent

* Crowd/massive simulation (fix animation system to allow duplicates)

* Improve library system for managing complex projects

* Deliver in 4k digital cinema (depending agreement with sponsor)

* Make the Blender 2.5x series fully production ready.

April 18th, 2010 at 2:14 pm

* Crowd/massive simulation (fix animation system to allow duplicates)

Oooh, me like this 🙂

April 19th, 2010 at 3:28 am

@ideasman42 Sorry about the missing info. My system is Debian AMD64 on a Turion X2, 1.9 GHz, 2 GB RAM, 1 GB swap. I tested it like this: LD_PRELOAD=/usr/lib/libjemalloc.so blender.bin

I’m going to test it on a complex scene with this notebook and with my desktop (Phenom X3, 2.3 GHz, 3.5 GB RAM, 1 GB swap), and I’ll post the results.

Anyway, is there another way to test it?

[]’s,

Still

April 19th, 2010 at 4:24 am

For Windows systems, I think Doug Lea’s malloc can be pretty good. I don’t know about Linux systems though.

The link is http://g.oswego.edu/ ; it’s under the “software” category.

April 19th, 2010 at 4:33 am

I nearly forgot: nedmalloc can also be good for testing…

http://www.nedprod.com/programs/portable/nedmalloc/

April 19th, 2010 at 11:44 am

@bruno, thanks for the link, it looks very useful. I was aware of some of these tools but haven’t tried them yet; bookmarked, and I will look into this later.

@Alex, it’s tricky, sometimes cutting-edge graphics and running on a 3+ year old system are mutually exclusive 🙁

@matty, that’s the idea: include it so it works out of the box.

@K-Linux, not sure what you mean by parallel computing exactly; having each PC render a single frame is simple and efficient.

@B.Tolputt, yep, ideally Blender wouldn’t make so many small allocations, but fixing this isn’t trivial like swapping out the allocator. It needs someone with some time and good test cases to research, find solutions and address the bottlenecks. At the moment I don’t have this much free time.

@Still, no, this seems fine.

@j, I tried this a while ago when testing speed, and none of the replacements made much difference. Since jemalloc is very popular I didn’t try all the others. If users want to test other malloc replacements and report on how they go, I won’t complain 🙂

April 20th, 2010 at 6:25 am

Tried out tcmalloc as well. Less wasted memory than ptmalloc2 but not quite as small as jemalloc. Seems faster speed-wise though. And offers a lot of control at runtime via environment variables.

I think what makes the noticeable difference for sculpting is that there is probably a lot less swapping out of the CPU cache than with the standard ptmalloc2. Just a guess though, no data to back it up.

April 22nd, 2010 at 9:56 am

You should really give NedMalloc a try.

Of course it all depends on your workload, but in the long run I’ve found that NedMalloc was often a winner over TC and JE, both speed- and memory-wise, on Linux systems.